Yes. AXYS is purpose-built to minimize your organization's LLM costs, including OpenAI usage costs. Using proprietary Retrieval-Augmented Generation (RAG) workflows and intelligent data filtering, AXYS reduces the amount of data sent to AI models, cutting token usage by up to 98.8 percent compared to industry averages. AXYS also provides built-in, real-time tracking and monitoring tools so you can see exactly how many tokens each query uses. This transparency helps you optimize prompt design, monitor usage, and keep costs under control as your AI adoption grows.
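To make the idea concrete, here is a minimal sketch of how filtering retrieved data before it reaches a model lowers token usage, and how that usage might be recorded per query. It is an illustration only and not the AXYS implementation: the names `approx_tokens`, `select_context`, `build_prompt_with_usage`, and `UsageRecord` are hypothetical, the word-overlap scoring is a simple stand-in for a real retrieval step, and the token count is a rough whitespace-based estimate rather than a real tokenizer.

```python
from dataclasses import dataclass


def approx_tokens(text: str) -> int:
    """Rough estimate: count whitespace-separated words (real tokenizers differ)."""
    return len(text.split())


@dataclass
class UsageRecord:
    """Hypothetical per-query usage record, for illustration only."""
    query: str
    tokens_unfiltered: int  # estimated tokens if every chunk were sent
    tokens_sent: int        # estimated tokens actually sent after filtering

    @property
    def reduction_pct(self) -> float:
        if self.tokens_unfiltered == 0:
            return 0.0
        return 100.0 * (1 - self.tokens_sent / self.tokens_unfiltered)


def select_context(query: str, chunks: list[str], token_budget: int) -> list[str]:
    """Keep chunks that share words with the query, best match first, within a budget."""
    query_words = set(query.lower().split())

    def overlap(chunk: str) -> int:
        return len(query_words & set(chunk.lower().split()))

    selected: list[str] = []
    used = 0
    for chunk in sorted(chunks, key=overlap, reverse=True):
        cost = approx_tokens(chunk)
        # Skip chunks with no shared words and chunks that would exceed the budget.
        if overlap(chunk) == 0 or used + cost > token_budget:
            continue
        selected.append(chunk)
        used += cost
    return selected


def build_prompt_with_usage(query: str, chunks: list[str], token_budget: int):
    """Build a filtered prompt and record estimated token usage for this query."""
    selected = select_context(query, chunks, token_budget)
    prompt = "\n".join(selected + [query])
    record = UsageRecord(
        query=query,
        tokens_unfiltered=approx_tokens("\n".join(chunks + [query])),
        tokens_sent=approx_tokens(prompt),
    )
    return prompt, record


if __name__ == "__main__":
    docs = [
        "invoice totals for march are listed here",
        "the office cafeteria menu changes weekly",
        "holiday schedule for the support team",
    ]
    prompt, usage = build_prompt_with_usage("march invoice totals", docs, token_budget=20)
    print(prompt)
    print(f"sent {usage.tokens_sent} of {usage.tokens_unfiltered} estimated tokens "
          f"({usage.reduction_pct:.1f}% fewer)")
```

In this toy example only the one relevant chunk is sent with the query. The actual savings in any deployment depend on the data, the retrieval method, and the model's tokenizer.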

Latest

From the blog

The latest industry news, interviews, technologies, and resources. View all postsTop 5 Checklist for Enterprise Search and Data Fabric Integration

In today’s fast-paced business environment, businesses need to be able to quickly and easily access essential data. One of the...

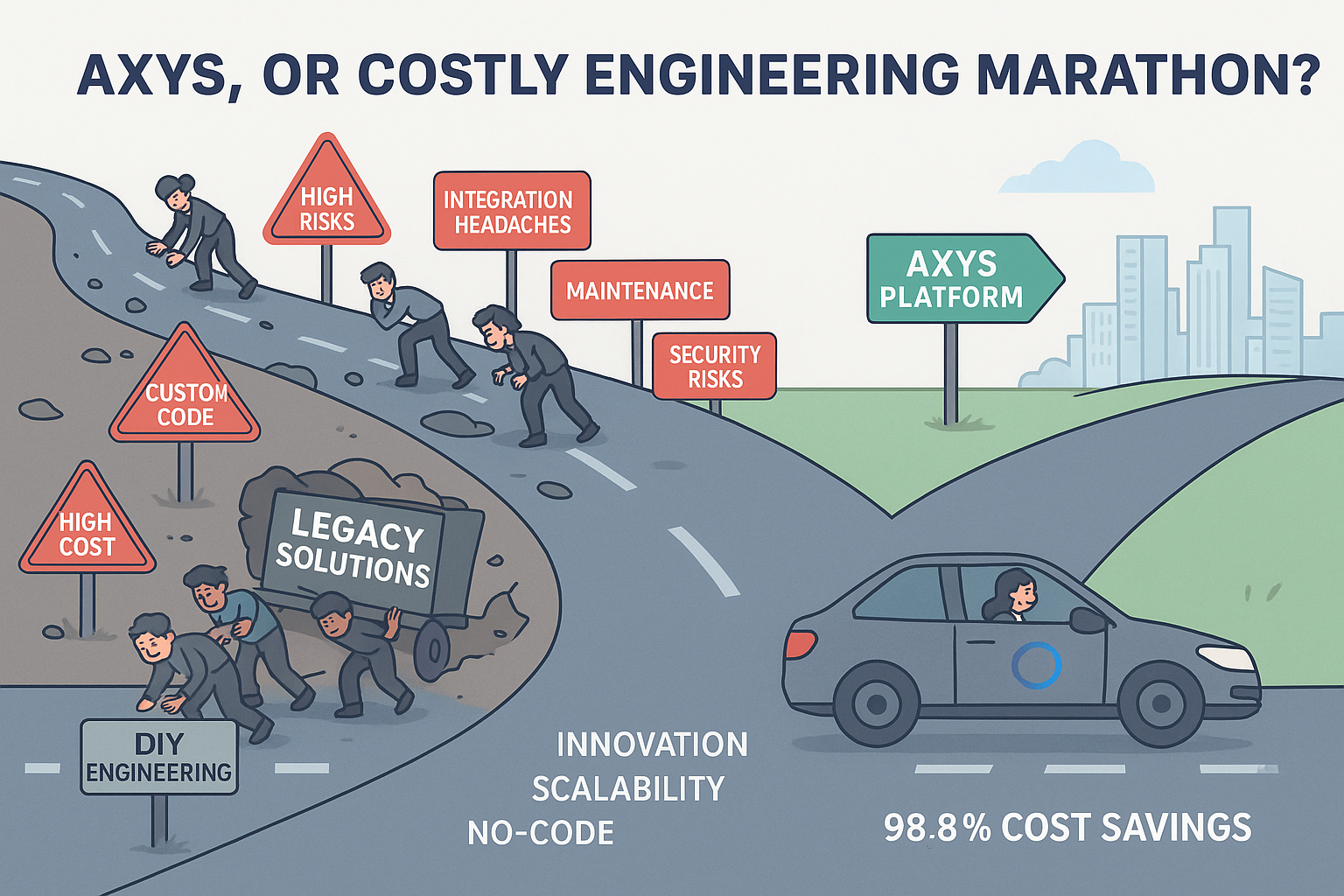

AXYS, Or Costly Engineering Marathon?

Why settle for complexity? There’s only one platform that unifies MCP, A2A, RAG, and more—with no code, no hassle, and...

Unlocking AI Agent Adoption: How AXYS Empowers Businesses to Scale AI with Confidence

AI Agent adoption in business has the potential to revolutionize everything from automation to real-time decision-making. But for most companies...

Still thinking about it?

By submitting this form, you agree to our privacy policy.