Business data is important for several reasons. It can help organizations make informed decisions, identify trends and patterns, and measure performance. It can also be used to forecast future outcomes, optimize operations, and personalize customer experiences. By collecting and analyzing data, businesses can gain valuable insights that help them improve their products, services, and overall operations.

Additionally, business data can be used to track progress and measure the success of various initiatives and projects. Overall, having accurate and up-to-date business data is crucial for any organization that wants to make informed, data-driven decisions.

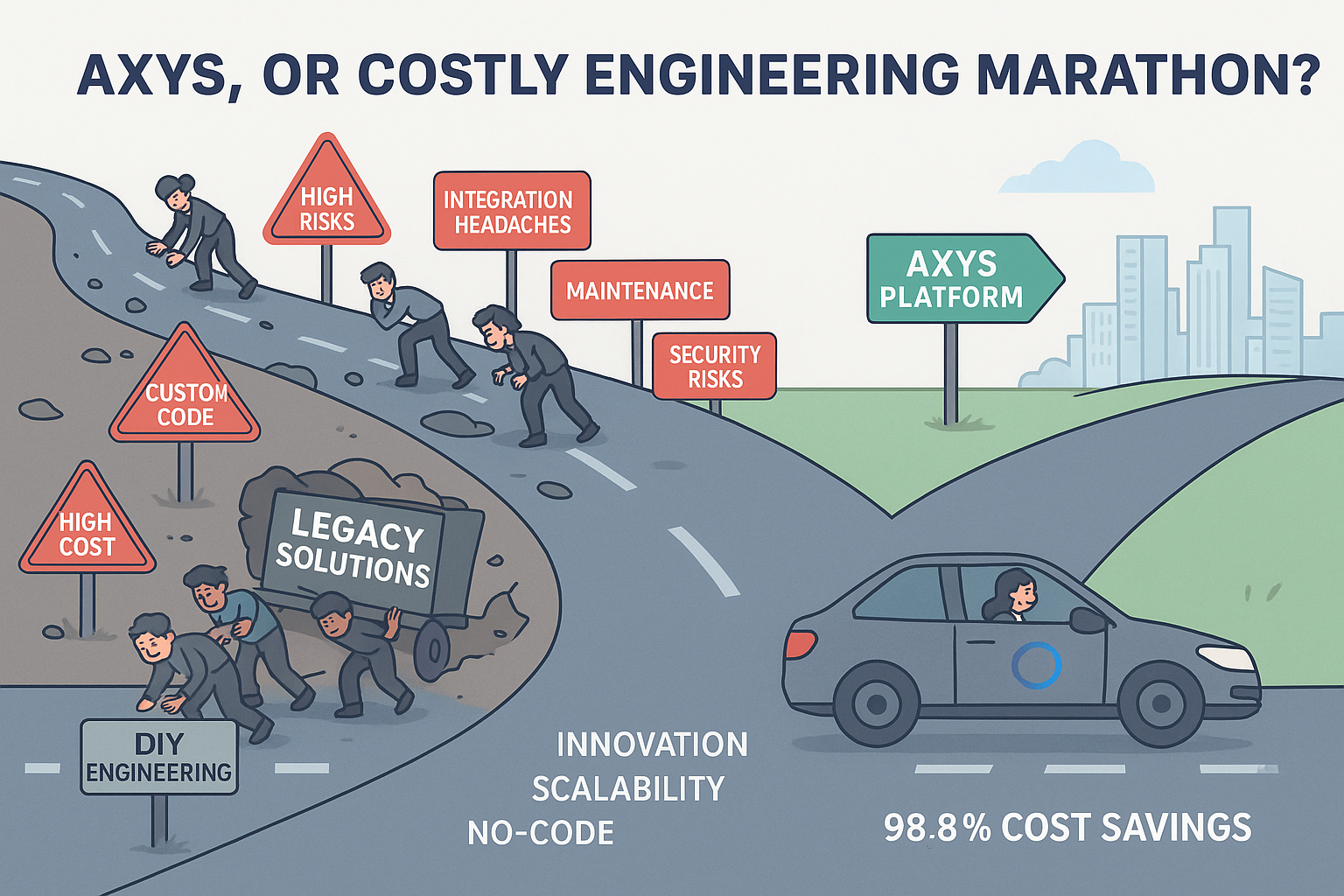

Simplify your Business Data by using Axys No-Code Solution

Here are five steps you can take to simplify your business data by using Axys No-Code Solution:

Identify the Data you Need

Begin by identifying the most important data to your business operations. This will help you focus on the most relevant data and avoid collecting and storing unnecessary data.

Standardize Data Formats

If you use multiple systems or applications to store data, ensure the data is stored in a consistent format. This will make it easier to integrate and analyze the data later on.
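As a rough illustration of what a consistent format can mean in practice, the sketch below converts dates written in several styles into a single ISO format (YYYY-MM-DD). The list of source formats and the sample values are assumptions made for this example; they do not describe how Axys or any particular system stores dates.

```python
from datetime import datetime

# Hypothetical formats used by different source systems (assumed for illustration).
KNOWN_FORMATS = ["%Y-%m-%d", "%d/%m/%Y", "%b %d, %Y"]


def to_iso_date(raw: str) -> str:
    """Return the date as YYYY-MM-DD, trying each known format in turn."""
    text = raw.strip()
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue  # this format did not match, try the next one
    raise ValueError(f"Unrecognized date format: {raw!r}")


# The same day as three different systems might record it.
records = ["2023-01-15", "15/01/2023", "Jan 15, 2023"]
standardized = [to_iso_date(r) for r in records]
print(standardized)
```

Raising an error for unknown formats, rather than guessing, keeps ambiguous values visible so they can be reviewed by hand.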

Implement Data Governance Policies

Establish clear policies and procedures for managing and using data within your organization. This will help ensure that data is used appropriately and that there is a clear chain of custody.

Use Data Management Tools

Consider using tools such as data lakes, data warehouses, or data integration platforms to manage and integrate your data. These tools can help you organize and access your data more efficiently.

Regularly Review and Clean your Data

Make sure to regularly review and clean your data to ensure that it is accurate and up to date. This can help you avoid making decisions based on outdated or incorrect information.

Techniques to Simplify your Business Data by using Axys No-Code Solution

Several techniques can be used to simplify your business data by using Axys No-Code Solution:

Aggregation

This involves reducing the granularity of the data by grouping similar data points and taking an aggregate measure such as the mean or sum.

What Does Data Aggregation Mean?

Data aggregation is a term that can intimidate people in the marketing world, but the idea has probably been around for about 20 years. In personal finance, for example, aggregation lets individuals consolidate their financial data in one place, which makes managing their personal financial information much simpler.

Data Grouping and Aggregation

Data aggregation essentially consists of two steps:

- Data Grouping

- Data Aggregation

Data Grouping identifies one or more data groups based on values in selected features.

Data Aggregation puts together (aggregates) the values in one or more selected columns for each group.
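The two steps can be sketched in a few lines of plain Python. The sales records, the `region` grouping feature, and the `amount` column are all made up for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sales records (illustrative data only).
sales = [
    {"region": "North", "amount": 120.0},
    {"region": "South", "amount": 80.0},
    {"region": "North", "amount": 200.0},
    {"region": "South", "amount": 40.0},
]

# Step 1: grouping. Collect the amounts that share the same region value.
groups = defaultdict(list)
for row in sales:
    groups[row["region"]].append(row["amount"])

# Step 2: aggregation. Reduce each group to summary measures.
summary = {
    region: {"total": sum(amounts), "average": mean(amounts)}
    for region, amounts in groups.items()
}

for region, stats in summary.items():
    print(region, stats)
```

Four detailed rows become two summary rows, one per region, which is the reduction in granularity described above.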

Sampling

This involves selecting a subset of the data to work with rather than using the entire dataset. This can be useful when the full dataset is too large to work with or when the patterns in the sample represent the patterns in the full dataset.

Sampling is often used in statistical analysis and machine learning to estimate the characteristics of a population. Several sampling techniques are available, including random, stratified, and cluster sampling. It is important to choose an appropriate method and to ensure that the sample is representative of the full dataset, so that the results are not biased.
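To make the difference between random and stratified sampling concrete, here is a minimal sketch using Python's standard `random` module. The customer records, the `segment` field, and the 10% fraction are assumptions chosen for the example.

```python
import random
from collections import defaultdict


def simple_random_sample(rows, k, seed=0):
    """Pick k rows uniformly at random, without replacement."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    return rng.sample(rows, k)


def stratified_sample(rows, key, fraction, seed=0):
    """Sample the same fraction from each stratum so every group stays represented."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for row in rows:
        strata[row[key]].append(row)
    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))  # at least one row per stratum
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical customer list: 80 retail and 20 enterprise customers.
customers = [
    {"id": i, "segment": "retail" if i < 80 else "enterprise"} for i in range(100)
]

random_subset = simple_random_sample(customers, 10)
stratified_subset = stratified_sample(customers, "segment", 0.1)
print(len(random_subset), len(stratified_subset))
```

A simple random sample may contain few or no enterprise customers by chance, while the stratified version keeps the 80/20 split of the full dataset.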

Data cleansing

This involves identifying and correcting errors or inconsistencies in the data. This can include filling in missing values, correcting typos, and standardizing data formats.

What is Data Cleansing?

Data cleansing, also known as data cleaning, is the process of identifying and correcting errors or inconsistencies in data. It is an important step in data preparation because errors or inconsistencies in the data can lead to incorrect or misleading results.

Data cleansing can involve various tasks, such as filling in missing values, correcting typos and other errors, standardizing data formats, and identifying and removing duplicate data. It is often necessary to perform data cleansing when working with large, complex datasets collected from multiple sources, as these datasets may contain various errors and inconsistencies.
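The tasks above might look like the following in a very small case. This is only a sketch: the records are invented, and filling a missing age with the median is one of several reasonable choices, not a recommendation for every dataset.

```python
from statistics import median

# Hypothetical raw records with stray spaces, mixed casing,
# a missing age, and one duplicate customer.
raw = [
    {"name": " Alice ", "city": "new york", "age": 34},
    {"name": "Bob", "city": "Boston", "age": None},
    {"name": "alice", "city": "New York", "age": 34},
    {"name": "Carol", "city": "BOSTON ", "age": 52},
]


def clean(records):
    """Standardize text fields, fill missing ages, and drop duplicates."""
    known_ages = [r["age"] for r in records if r["age"] is not None]
    fallback_age = median(known_ages)  # assumed fill strategy for this sketch
    seen = set()
    cleaned = []
    for r in records:
        row = {
            "name": r["name"].strip().title(),  # standardize spacing and casing
            "city": r["city"].strip().title(),
            "age": r["age"] if r["age"] is not None else fallback_age,
        }
        key = (row["name"], row["city"], row["age"])
        if key not in seen:  # keep only the first copy of each record
            seen.add(key)
            cleaned.append(row)
    return cleaned


cleaned = clean(raw)
for row in cleaned:
    print(row)
```

Duplicates are detected only after the text fields are standardized; otherwise " Alice " and "alice" would be treated as two different customers.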

Data transformation

This involves changing the data into a different format or structure more suitable for the task. Examples include normalizing data, scaling data, and applying mathematical transformations.

Normalization: This involves scaling the data to a common range, such as [0, 1] or [-1, 1]. Normalization is often used when the scale of the data varies significantly and could impact the performance of a machine-learning algorithm.

Standardization: This involves rescaling the data so that it has a mean of 0 and a standard deviation of 1. Standardization is often used when the data follows a Gaussian distribution and the algorithm is sensitive to the scale of its inputs.

Aggregation: This involves reducing the granularity of the data by grouping similar data points and taking an aggregate measure such as the mean or sum.

Filtering: This involves selecting a subset of the data based on certain criteria. Filtering can also help to remove noise or irrelevant data from the dataset.

Derivation: This involves creating new features or attributes based on existing data. This can be done through binning, encoding, or generating polynomial features.
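Several of these transformations fit in a short sketch. The revenue figures, the filtering threshold of 30, and the bin edges and labels are hypothetical values picked for the example; standardization here uses the population standard deviation, which is one common convention.

```python
from statistics import mean, pstdev


def min_max_normalize(values):
    """Normalization: rescale values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # all values equal, nothing to scale
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Standardization: rescale values to mean 0 and standard deviation 1."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def bin_value(value, edges, labels):
    """Derivation by binning: return the label of the first bin whose upper edge exceeds value."""
    for edge, label in zip(edges, labels):
        if value < edge:
            return label
    return labels[-1]  # value is at or above the last edge


revenue = [10.0, 20.0, 30.0, 40.0, 50.0]

normalized = min_max_normalize(revenue)
z_scores = standardize(revenue)
large_only = [v for v in revenue if v >= 30.0]  # filtering on a criterion
tiers = [bin_value(v, [25.0, 45.0], ["low", "mid", "high"]) for v in revenue]

print(normalized)
print(tiers)
```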

Data reduction

This involves eliminating unnecessary data points or features that do not contribute to the analysis or model. This can be done through feature selection techniques, such as removing correlated features, or by using a dimensionality reduction algorithm.

Several techniques can help with data reduction:

Feature selection: This involves selecting a subset of the most relevant features for the analysis or model. Feature selection can be done manually, by choosing features based on domain knowledge or statistical analysis, or automatically, using algorithms that evaluate the relevance of each feature.

Dimensionality reduction: This involves reducing the number of dimensions or features in the data through techniques such as principal component analysis (PCA) or linear discriminant analysis (LDA). Dimensionality reduction can be useful for reducing the complexity of the data and improving the performance of machine learning algorithms.

Data compression: This involves reducing the size of the data by eliminating redundancy or unnecessary data points. Data compression can be lossless, meaning that the original data can be recovered exactly, or lossy, meaning that some information is lost in the compression process.

Data aggregation: This involves reducing the granularity of the data by grouping similar data points and taking an aggregate measure such as the mean or sum. Aggregation can simplify the data and make it easier to work with.
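Two of these ideas can be sketched with the Python standard library: dropping a feature that is highly correlated with one already kept, and a lossless compression round trip with `zlib`. The feature names, the values, and the 0.95 correlation threshold are assumptions for illustration, and the sketch assumes no feature is constant (a constant column would make the correlation undefined).

```python
import json
import zlib
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length, non-constant lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def drop_correlated(features, threshold=0.95):
    """Keep a feature only if it is not highly correlated with one already kept."""
    kept = {}
    for name, values in features.items():
        if all(abs(pearson(values, other)) < threshold for other in kept.values()):
            kept[name] = values
    return kept


# Hypothetical features: price_cents carries the same information as price_usd.
features = {
    "price_usd": [10.0, 20.0, 30.0, 40.0],
    "price_cents": [1000.0, 2000.0, 3000.0, 4000.0],
    "units_sold": [7.0, 3.0, 8.0, 2.0],
}
reduced = drop_correlated(features)
print(list(reduced))

# Lossless compression: the decompressed data matches the original exactly.
payload = json.dumps(features).encode("utf-8")
compressed = zlib.compress(payload)
restored = json.loads(zlib.decompress(compressed).decode("utf-8"))
print(restored == features)
```

Which of two correlated features is kept depends on the order in which they are visited, so in practice the choice is usually guided by domain knowledge.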