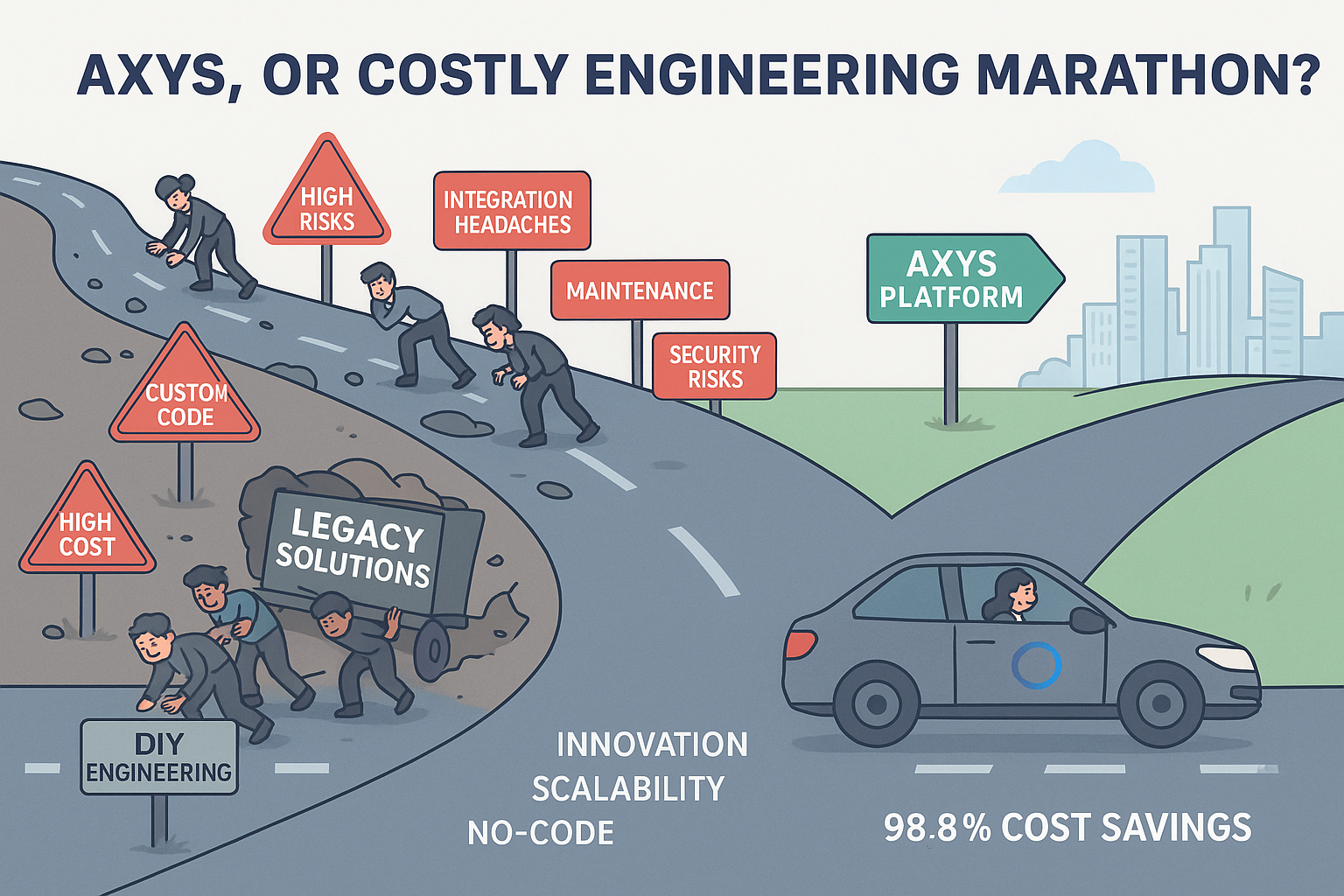

Enterprise AI has become indispensable, yet deploying models like OpenAI’s ChatGPT at scale presents substantial challenges: escalating API costs, limited memory, and, for teams relying on RAG alone, persistent context loss in extended conversations. At AXYS, we’ve tackled these challenges head-on, cutting typical OpenAI API costs by up to 98.8% while making responses nearly 90% faster.

Slashing OpenAI Costs by 98.8%—The AXYS AI Chat Advantage

Large Language Models (LLMs), despite their impressive capabilities, struggle significantly with memory limitations. They lack real-time, persistent access to enterprise knowledge, causing costly inefficiencies:

- Context Loss: Every model has a fixed context window, so in longer interactions earlier inputs are truncated or dropped and effectively forgotten, resulting in repetitive and inaccurate responses.

- Expensive API Calls: Many chat APIs are stateless, so previously processed data and conversation history must be resent with every request, and those repeated tokens balloon API expenses.

- Outdated or Inaccurate Data: Without continuous access to current enterprise data, LLMs risk providing outdated or irrelevant answers.
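To make the first two problems concrete, here is a minimal Python sketch. It assumes a stateless chat API where the client resends the conversation history on every request, approximates tokens as whitespace-separated words (real tokenizers count differently), and ignores the model's replies; `fit_to_window` and `cumulative_input_tokens` are illustrative names, not part of any real API.

```python
from typing import List


def count_tokens(text: str) -> int:
    """Rough token estimate: one token per whitespace-separated word (assumption)."""
    return len(text.split())


def fit_to_window(history: List[str], max_tokens: int) -> List[str]:
    """Keep the most recent turns that fit in the context budget; older turns are dropped."""
    kept: List[str] = []
    used = 0
    for turn in reversed(history):  # walk from newest to oldest
        cost = count_tokens(turn)
        if used + cost > max_tokens:
            break  # this turn and everything older is "forgotten"
        kept.append(turn)
        used += cost
    return list(reversed(kept))  # restore chronological order


def cumulative_input_tokens(history: List[str]) -> int:
    """Total input tokens billed if the full history is resent with every user turn."""
    total = 0
    running = 0
    for turn in history:
        running += count_tokens(turn)  # history size sent with this request
        total += running
    return total


if __name__ == "__main__":
    history = [
        "Our fiscal year starts in April",
        "Summarize Q1 revenue",
        "Now compare it with Q2",
        "Which region grew fastest",
    ]
    print(fit_to_window(history, max_tokens=10))
    print(cumulative_input_tokens(history))
```

With a 10-token budget, the opening fact about the fiscal year no longer fits and is silently dropped, while the tokens billed across the conversation (47 for 18 words of history) grow faster than the conversation itself.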

AXYS fundamentally redefines how enterprises integrate AI by serving as the long-term memory layer that LLMs desperately need. Our platform lets AI retrieve, reference, and reuse enterprise data in real time, effectively eliminating context-related issues.

Breaking the Limits of LLMs with Persistent AXYS AI Memory

Here’s how AXYS delivers transformative results:

- Unified Data Layer: AXYS consolidates structured and unstructured data across documents, databases, SaaS applications, and APIs. By centralizing enterprise knowledge, we eliminate costly redundant queries, dramatically improving efficiency.

- Real-time Retrieval-Augmented Generation (RAG): AXYS gives AI agents immediate access to precise business data, so every interaction draws on the most current information and responses are more accurate and relevant.

- Optimized Token Efficiency: By pre-processing and structuring AI queries, our proprietary approach cuts unnecessary API calls and minimizes token usage, enabling enterprises to slash OpenAI API costs by as much as 98.8%.

- Security and Compliance: By governing data access, AXYS ensures that sensitive enterprise information remains secure, compliant, and fully within organizational control.
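AXYS’s retrieval and token-optimization methods are proprietary and are not reproduced here. As a generic illustration of why retrieval cuts token usage, the Python sketch below ranks document chunks by word overlap with the query (a simple stand-in for the embedding similarity most RAG systems use), keeps the top `k`, and builds a prompt from those chunks only. The sample chunks, the value of `k`, and the word-based token estimate are all assumptions.

```python
import re
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    """Lowercase alphanumeric words; also used as a rough token count (assumption)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(query: str, chunk: str) -> int:
    """Number of distinct query words that also appear in the chunk."""
    return len(set(tokenize(query)) & set(tokenize(chunk)))


def retrieve(query: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return up to k best-matching chunks, dropping chunks with no overlap."""
    ranked: List[Tuple[int, int, str]] = [
        (score(query, c), -i, c) for i, c in enumerate(chunks)
    ]
    ranked.sort(reverse=True)  # highest score first; ties keep the original order
    return [c for s, _, c in ranked[:k] if s > 0]


def build_prompt(query: str, context: List[str]) -> str:
    """Assemble a grounded prompt from the supplied context chunks."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


if __name__ == "__main__":
    chunks = [
        "Refund requests are accepted within 30 days of purchase.",
        "Our headquarters moved to Austin in 2021.",
        "Refunds are issued to the original payment method.",
        "The cafeteria is open from 8am to 3pm.",
    ]
    query = "How are refunds issued?"
    full = build_prompt(query, chunks)                        # naive: send everything
    lean = build_prompt(query, retrieve(query, chunks, k=2))  # retrieval: best matches only
    print(len(tokenize(full)), len(tokenize(lean)))
```

Production RAG systems usually swap word overlap for vector similarity over embeddings, but the token arithmetic is the same: prompt size tracks the number of chunks retrieved rather than the size of the corpus.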

Why is AXYS essential for enterprise AI?

- Persistent AI Memory: Unlike typical LLM setups, AXYS ensures your AI never loses context, maintaining accuracy across extensive workflows.

- Fewer AI Hallucinations: By grounding every query in trusted enterprise data, AXYS substantially reduces hallucinations, keeping AI responses accurate, relevant, and reliable.

- Cost Efficiency: AXYS optimizes API usage, reducing the financial burden of AI deployment while boosting performance.

- Seamless Integration: AXYS easily connects with OpenAI, ChatGPT, and custom AI agents, integrating smoothly with existing business processes and data sources.

Affordable AI at Scale—AXYS Optimizes Token Efficiency

Our customers are experiencing these advantages firsthand. With AXYS, they’re seeing response times nearly 90% faster thanks to streamlined data access and query optimization. These efficiency gains translate directly into greater productivity, lower operational costs, and a stronger competitive advantage.

AXYS doesn’t merely reduce expenses—it transforms how enterprises leverage AI. With our platform, costly context loss, redundant API calls, and inaccuracies become problems of the past. Instead, companies gain an AI that remembers, understands, and delivers precision at scale.

AXYS gives your AI a memory, so it never forgets critical business knowledge. Enterprise AI breaks without memory; AXYS ensures your AI accesses, retains, and recalls data in real time. No more limits. No more costly forgetfulness.

AXYS: the future of intelligent, efficient, and cost-effective enterprise AI.

Frequently asked questions

Everything you need to know about the AXYS Platform

AI, LLM, Prompting, and Search

AXYS is purpose-built to support Retrieval Augmented Generation (RAG) workflows for your AI agents. It ships with pre-built, proprietary RAG pipelines that work out of the box, so your agents can immediately retrieve relevant structured or unstructured data and produce more accurate, contextual responses. With AXYS’s intuitive no-code interface, you can connect, organize, and structure all your data sources, set up RAG pipelines without custom development, and customize every step of the workflow: selecting data sources, filtering data, designing prompts, and formatting outputs to fit your business needs. By optimizing each query, AXYS improves the quality of AI-generated responses while reducing token usage and operational costs for large language models.
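AXYS configures these steps through its no-code interface, and its internal pipeline format is not public. Purely to illustrate how the four customization points (source selection, filtering, prompt design, output formatting) fit together, the Python sketch below models them as one hypothetical configuration object. `RagPipelineConfig`, `build_request`, the record fields, and the sample data are invented for this example, and the LLM call itself is omitted.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Record = Dict[str, str]


@dataclass
class RagPipelineConfig:
    """Hypothetical pipeline settings; not AXYS's actual configuration schema."""
    sources: List[str]                # connected sources to read from
    keep: Callable[[Record], bool]    # filter applied to every record
    prompt_template: str              # must contain {context} and {question}
    output_format: str = "bullets"    # "bullets" or "plain"


def build_request(cfg: RagPipelineConfig, data: Dict[str, List[Record]], question: str) -> str:
    """Select sources, filter records, then render the prompt that would be sent to the LLM."""
    selected = [r for src in cfg.sources for r in data.get(src, [])]
    filtered = [r for r in selected if cfg.keep(r)]
    context = "\n".join(r["text"] for r in filtered)
    style = "Answer as a bulleted list." if cfg.output_format == "bullets" else "Answer in plain prose."
    return cfg.prompt_template.format(context=context, question=question) + "\n" + style


if __name__ == "__main__":
    data = {
        "crm": [
            {"text": "Acme renewed in March", "region": "EU"},
            {"text": "Globex churned in May", "region": "US"},
        ],
        "wiki": [{"text": "Renewals are tracked in the CRM", "region": "ALL"}],
    }
    cfg = RagPipelineConfig(
        sources=["crm"],
        keep=lambda r: r["region"] == "EU",
        prompt_template="Context:\n{context}\n\nQuestion: {question}",
    )
    print(build_request(cfg, data, "Which EU customers renewed?"))
```

Only the EU record from the selected `crm` source reaches the prompt; the unselected `wiki` source and the filtered-out US record never consume tokens.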

AXYS is engineered to dramatically reduce the cost and complexity of AI and LLM usage in your organization. By leveraging advanced Retrieval Augmented Generation (RAG) workflows and intelligent data orchestration, AXYS delivers only the most relevant and concise information to AI models. This targeted approach achieves up to a 98.8 percent reduction in token usage, significantly lowering OpenAI and LLM operational costs while improving performance. AXYS automates the preparation, filtering, and structuring of your data before it is sent to AI, ensuring efficient, cost-effective queries and faster results without sacrificing data accuracy or security.
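A figure like this is a relative reduction: if a baseline sends `T_before` tokens per query and the optimized pipeline sends `T_after`, the reduction is `100 * (T_before - T_after) / T_before`. A 98.8 percent reduction therefore means each query uses 1.2 percent of the baseline tokens, and because API input pricing is per token, input cost falls by the same proportion. The Python sketch below does this arithmetic; the token counts and the per-million-token price are hypothetical placeholders, not AXYS measurements or current OpenAI rates.

```python
def reduction_pct(tokens_before: int, tokens_after: int) -> float:
    """Relative token reduction, in percent."""
    if tokens_before <= 0:
        raise ValueError("tokens_before must be positive")
    return 100.0 * (tokens_before - tokens_after) / tokens_before


def cost_usd(tokens: int, usd_per_million: float) -> float:
    """Cost of `tokens` input tokens at a per-million-token price."""
    return tokens * usd_per_million / 1_000_000


if __name__ == "__main__":
    before, after = 100_000, 1_200  # hypothetical tokens per query
    price = 2.50                    # hypothetical USD per 1M input tokens
    print(f"{reduction_pct(before, after):.1f}%")                             # 98.8%
    print(f"${cost_usd(before, price):.4f} -> ${cost_usd(after, price):.4f}")  # $0.2500 -> $0.0030
```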

AXYS provides a no-code, guided approach to AI prompting and model integration. The platform analyzes your connected data schemas and suggests optimized prompts for more accurate AI results. You can fine-tune prompts and model interactions with intuitive controls—no engineering needed. AXYS even uses its own AI to recommend better prompts and streamline model integration, making it easy to leverage the full power of OpenAI and other LLMs for your business workflows.
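AXYS’s prompt-recommendation logic is not documented here. As a rough illustration of what a schema-aware prompt can look like, the Python sketch below turns a table’s column names and types into a prompt that restricts the model to columns that actually exist. The function name, the template wording, and the sample `orders` table are hypothetical.

```python
from typing import Dict


def schema_prompt(table: str, columns: Dict[str, str], question: str) -> str:
    """Build a prompt grounded in a table's real columns (hypothetical template)."""
    col_lines = "\n".join(f"- {name} ({dtype})" for name, dtype in columns.items())
    return (
        f"You are answering questions about the table `{table}`.\n"
        f"Available columns:\n{col_lines}\n"
        "Only reference the columns listed above. "
        "If the question cannot be answered from them, say so.\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(schema_prompt(
        "orders",
        {"order_id": "int", "region": "text", "amount": "decimal"},
        "What is the average order amount by region?",
    ))
```

Listing the real columns up front gives the model less room to invent fields, which is one common reason schema-derived prompts produce more accurate answers.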

AXYS fully supports asking statistical questions of your data using large language models (LLMs) such as OpenAI’s models. The platform enables you to analyze both structured and unstructured data, generate summaries, identify trends, and extract insights through natural language queries. This makes it easy for anyone to perform complex statistical analysis and reporting without coding or specialized data skills.

Pricing and Licensing

AXYS provides real-time visibility into your OpenAI token usage directly within the platform. You can monitor token consumption for each query, track historical usage patterns, and quickly identify cost drivers as you interact with your data. This transparency helps you manage your AI budget, optimize prompt efficiency, and keep full control over OpenAI and LLM costs.
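OpenAI’s Chat Completions API reports token counts for each request in the response’s `usage` object (`prompt_tokens`, `completion_tokens`, `total_tokens`). The Python sketch below shows one way such per-query counts could be logged and ranked to surface cost drivers; `TokenLedger` and the query labels are hypothetical, the numbers are made up, and this is not how AXYS implements its tracking.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class TokenLedger:
    """Hypothetical per-query token log fed from API usage fields."""
    entries: List[Tuple[str, int, int]] = field(default_factory=list)

    def record(self, query_label: str, prompt_tokens: int, completion_tokens: int) -> None:
        # Values would come from the response's usage.prompt_tokens / usage.completion_tokens.
        self.entries.append((query_label, prompt_tokens, completion_tokens))

    def total(self) -> int:
        """All tokens consumed so far (prompt plus completion)."""
        return sum(p + c for _, p, c in self.entries)

    def top_drivers(self, n: int = 3) -> List[Tuple[str, int]]:
        """Query labels ranked by total tokens consumed across all their calls."""
        per_label: Dict[str, int] = defaultdict(int)
        for label, p, c in self.entries:
            per_label[label] += p + c
        return sorted(per_label.items(), key=lambda kv: kv[1], reverse=True)[:n]


if __name__ == "__main__":
    ledger = TokenLedger()
    ledger.record("quarterly-summary", 1800, 250)
    ledger.record("faq-lookup", 300, 80)
    ledger.record("quarterly-summary", 1700, 240)
    print(ledger.total())           # 4370
    print(ledger.top_drivers(1))    # [('quarterly-summary', 3990)]
```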

AXYS is purpose-built to minimize LLM and OpenAI costs for your organization. By leveraging proprietary Retrieval Augmented Generation (RAG) workflows and intelligent data filtering, AXYS reduces the amount of data sent to AI models, cutting token usage by up to 98.8 percent compared to industry averages. AXYS also provides built-in real-time tracking and monitoring tools so you can see exactly how many tokens each query uses. This transparency helps you optimize prompt design, monitor usage, and control costs efficiently as your AI adoption grows.

AXYS is built on its own proprietary Retrieval Augmented Generation (RAG) technology, designed from the ground up to dramatically reduce token usage and operational costs with OpenAI and other large language models (LLMs). By intelligently filtering and delivering only the most relevant, context-rich data to each AI query, AXYS minimizes token consumption, yielding cost savings of up to 98.8 percent. The platform also offers real-time token usage tracking and optimization tools, giving you complete visibility and control as your AI adoption grows. This approach makes AXYS one of the most efficient and cost-effective solutions for managing AI and LLM costs.