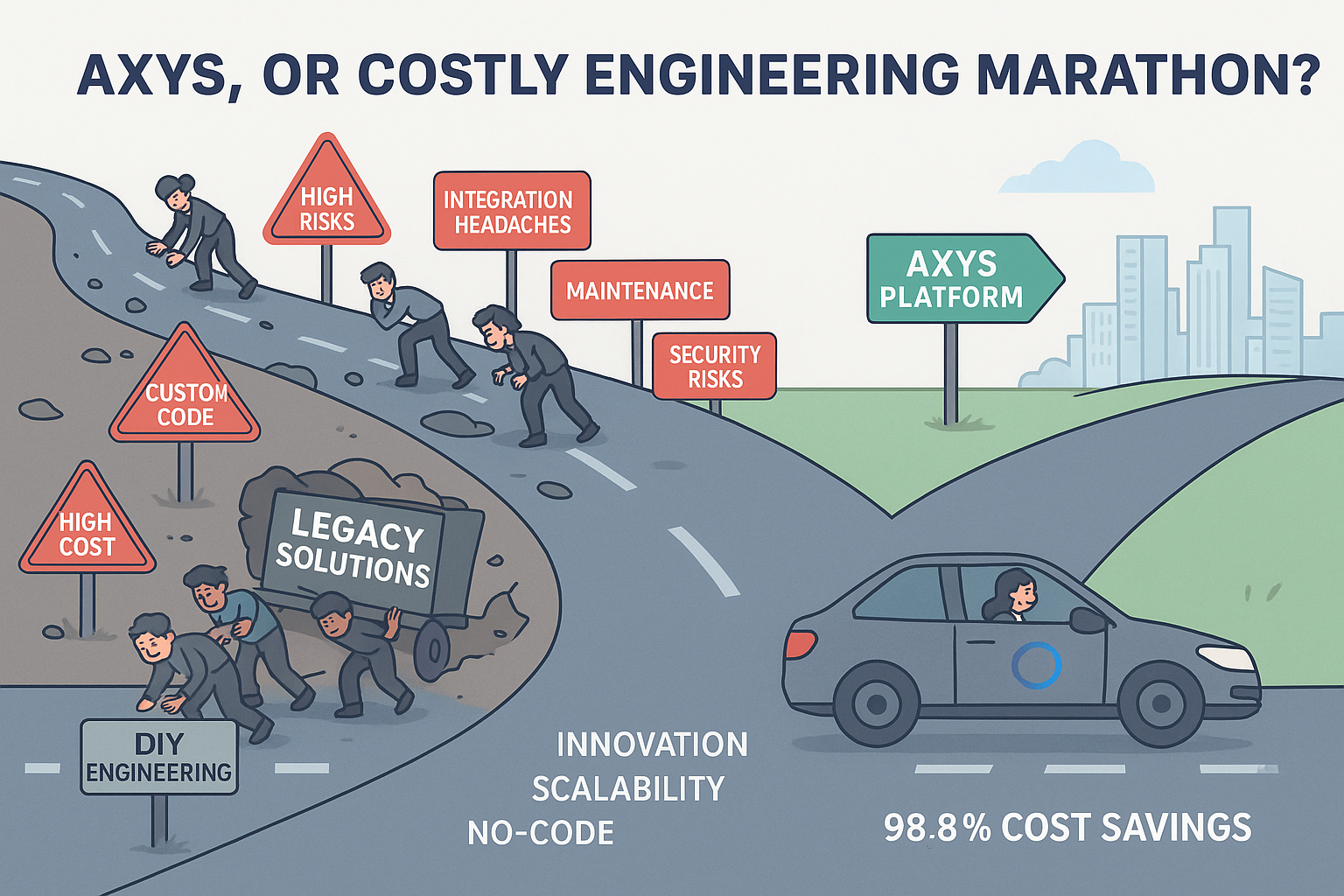

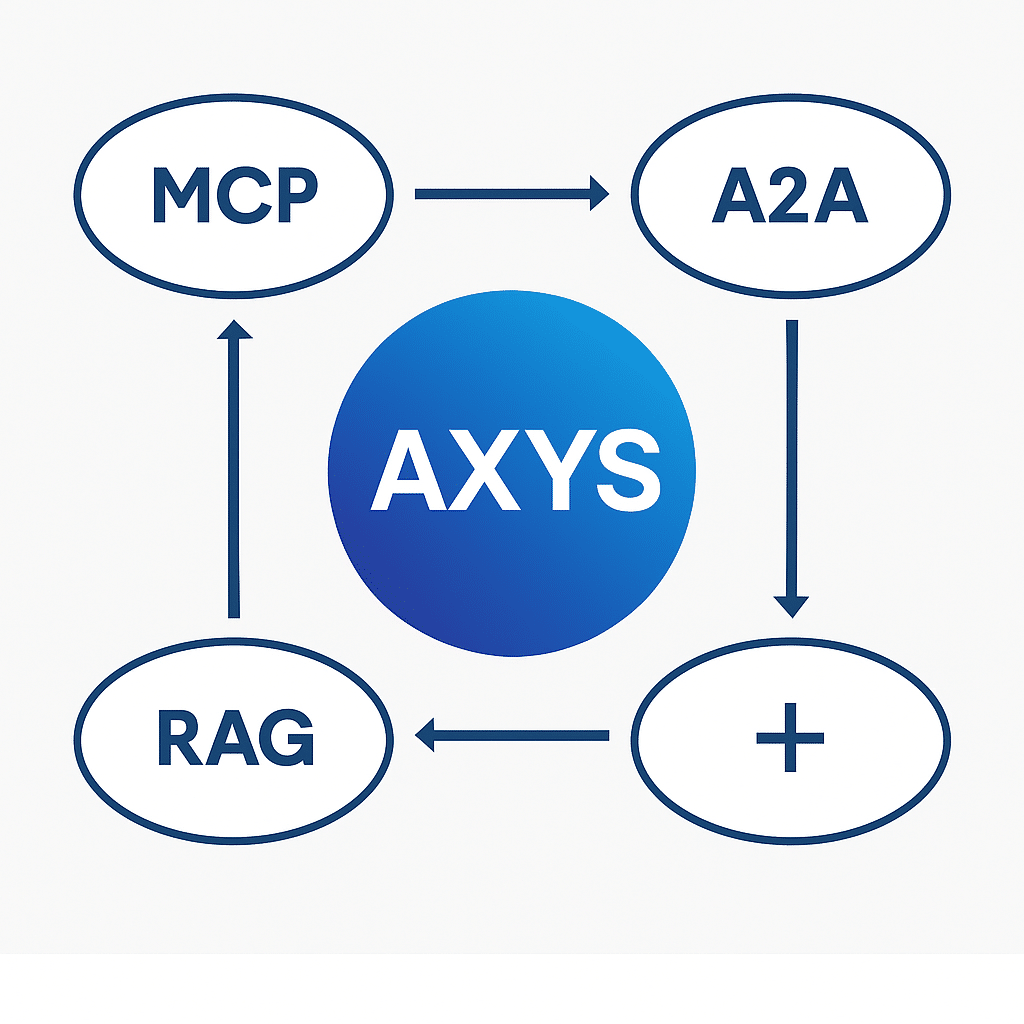

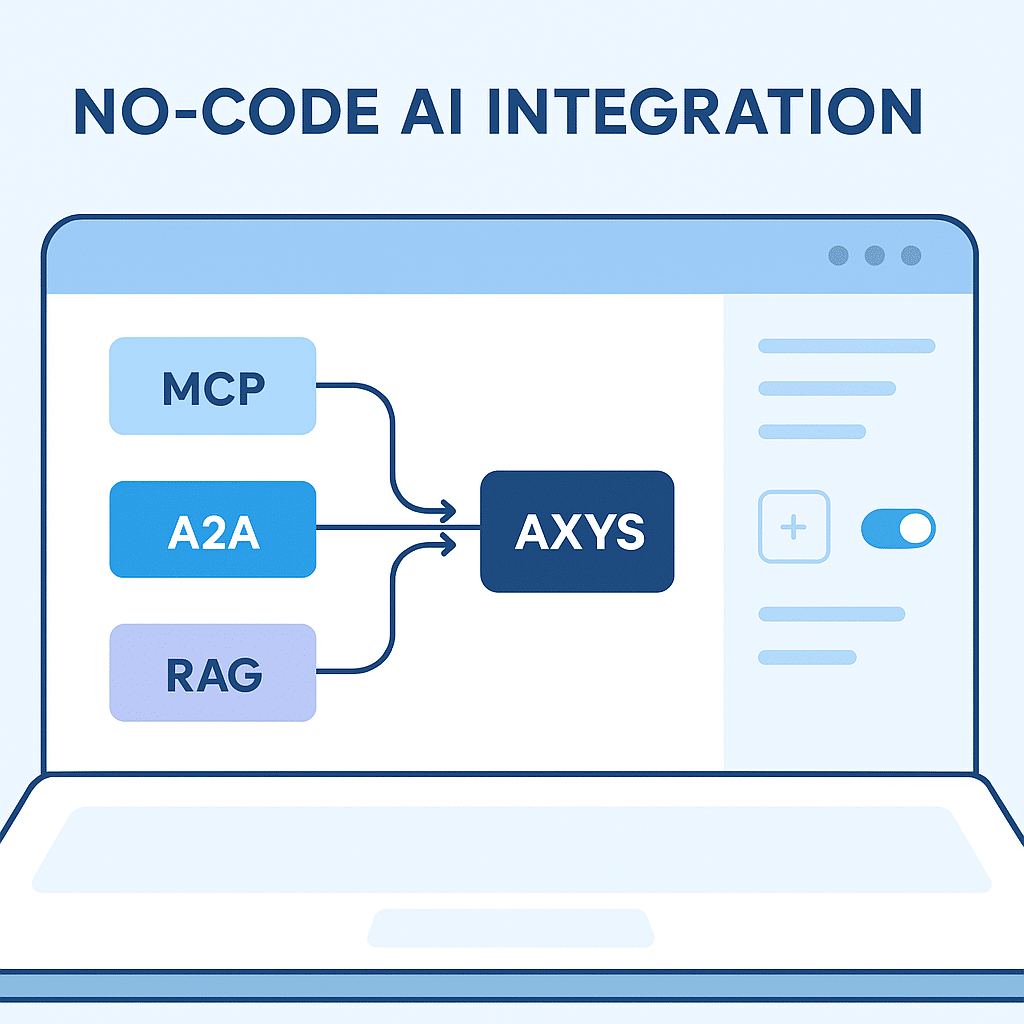

Why settle for complexity? There’s only one platform that unifies MCP, A2A, RAG, and more—with no code, no hassle, and at a fraction of the cost.

AXYS unifies MCP, A2A, RAG, and more—no code required, no engineering marathon.

The emergence of agentic AI, together with protocols and patterns such as MCP (Model Context Protocol), A2A (Agent-to-Agent), and RAG (Retrieval-Augmented Generation), marks a new era of intelligent automation. Each addresses a critical piece of the enterprise AI puzzle: MCP standardizes connectivity, A2A enables agent collaboration, and RAG infuses LLMs with live data. However, real-world implementation reveals significant operational, integration, and cost barriers when attempting to combine these approaches. There is currently no other platform on the market that delivers a unified, no-code, all-in-one solution to these challenges. AXYS redefines this landscape by integrating these protocols (and more), giving companies radically simplified, scalable, and cost-effective AI orchestration under one roof, for less than the cost of hiring a single engineer rather than a team of 10 to 15.

Understanding the Technologies

MCP (Model Context Protocol)

MCP is an open protocol that lets AI agents connect to and communicate with external tools, data sources, and APIs in a consistent way. It was designed to eliminate the chaos of custom-coded integrations and make it easier for agents to “discover” and use resources dynamically. MCP by itself is a protocol, not a product: there is no out-of-the-box system to connect, orchestrate, and build applications on top of MCP endpoints.
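To make the protocol idea concrete, here is a minimal sketch of the two JSON-RPC 2.0 request shapes MCP defines for tools, `tools/list` for discovery and `tools/call` for invocation, answered by a toy in-process dispatcher. The `get_weather` tool, its schema, and the `handle` function are hypothetical and exist only for illustration; a real deployment would use an MCP SDK and a proper transport rather than a direct function call.

```python
import json

# Hypothetical tool registry: name -> description, JSON Schema for inputs, handler.
TOOLS = {
    "get_weather": {
        "description": "Return a canned weather report for a city.",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
        "handler": lambda args: f"Sunny in {args['city']}",
    }
}


def handle(request: dict) -> dict:
    """Dispatch a JSON-RPC 2.0 request for the two tool methods sketched here."""
    method, req_id = request["method"], request["id"]
    if method == "tools/list":
        # Discovery: advertise each tool's name, description, and input schema.
        tools = [
            {"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
            for n, t in TOOLS.items()
        ]
        return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}
    if method == "tools/call":
        # Invocation: look up the named tool and run it with the given arguments.
        params = request["params"]
        tool = TOOLS.get(params["name"])
        if tool is None:
            return {"jsonrpc": "2.0", "id": req_id,
                    "error": {"code": -32602, "message": "Unknown tool"}}
        text = tool["handler"](params.get("arguments", {}))
        return {"jsonrpc": "2.0", "id": req_id,
                "result": {"content": [{"type": "text", "text": text}]}}
    return {"jsonrpc": "2.0", "id": req_id,
            "error": {"code": -32601, "message": "Method not found"}}


listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
               "params": {"name": "get_weather", "arguments": {"city": "Oslo"}}})
print(json.dumps(call["result"], indent=2))
```

Because the agent learns tool names and schemas from the `tools/list` response instead of from hand-written adapter code, adding a tool on the server side does not require changing the client.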

A2A (Agent-to-Agent)

A2A provides standardized communication and task delegation between multiple AI agents. As more enterprises rely on networks of specialized agents, A2A aims to make agent collaboration discoverable and manageable—yet it still leaves gaps in payload/schema transparency, centralized security, and practical integration for business outcomes.
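The delegation idea can be illustrated with a small sketch: each agent publishes a card describing its skills, and a coordinator routes a task to the first agent that advertises the needed skill. The `AgentCard` fields, the agent names, and the `delegate` function are illustrative assumptions loosely modeled on the agent-card concept; this is not the A2A wire format.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentCard:
    """Hypothetical, simplified description an agent publishes about itself."""
    name: str
    skills: set[str]
    run: Callable[[str], str] = field(repr=False)


def delegate(task_skill: str, payload: str, agents: list[AgentCard]) -> str:
    """Route the payload to the first agent advertising the requested skill."""
    for agent in agents:
        if task_skill in agent.skills:
            return f"{agent.name}: {agent.run(payload)}"
    raise LookupError(f"no agent advertises skill {task_skill!r}")


agents = [
    AgentCard("billing-agent", {"invoice-lookup"}, lambda p: f"invoice {p} is paid"),
    AgentCard("support-agent", {"ticket-triage"}, lambda p: f"ticket {p} set to high priority"),
]

print(delegate("ticket-triage", "T-42", agents))
```

The sketch also shows where the gaps mentioned above appear in practice: the payload is an untyped string, and nothing here authenticates the caller or audits the hand-off.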

RAG (Retrieval-Augmented Generation)

RAG brings current and proprietary enterprise data into the context window of LLMs, typically using embedding and vector search. This pattern enhances answer quality, accuracy, and context, but managing embeddings, keeping them up to date, and avoiding direct database exposure introduces complexity, resource overhead, and security risks.
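A minimal sketch of the retrieve-then-generate pattern follows. A word-count vector stands in for a real embedding model and a list scan stands in for a vector database; the sample documents and the names `embed`, `retrieve`, and `build_prompt` are hypothetical, chosen only for illustration.

```python
import math
import re
from collections import Counter

# Made-up knowledge base for the example.
DOCS = [
    "Refunds are issued within 14 days of a return request.",
    "Enterprise plans include single sign-on and audit logs.",
    "Support is available by email on business days.",
]


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a real system would call a model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(query: str, docs: list[str]) -> str:
    """Place only the retrieved passages in the LLM's context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


print(build_prompt("How many days do refunds take?", DOCS))
```

The operational burden described above lives in the parts this sketch skips: whenever a source document changes, its stored embedding has to be recomputed and the index refreshed.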

The Challenge of “More”

All of these technologies are powerful when used alone. However, real-world solutions require much more:

- No-code/low-code interfaces

- Seamless orchestration

- Secure, compliant workflows

- Flexible, extensible connectors

- Instant “plug and play” for both new and legacy resources

- Transparent cost and usage optimization

No single technology addresses these needs holistically.

Key Problems and Issues with Current Approaches

Fragmentation and Complexity

- Custom Integrations Everywhere: Each new tool or agent typically requires a custom adapter, leading to code duplication, brittle connections, and massive maintenance overhead.

- No Unified Platform: MCP, A2A, and RAG are protocols and reference architectures, not deployable products. No system exists to manage the entire workflow—from source connection to application delivery—without deep engineering.

Lack of Standardization and Dynamic Discovery

- Siloed Solutions: Without standardization, the discovery of new tools and agents is hard-coded and inflexible.

- Manual Management: Developers are left to define schemas, handle payload changes, and update documentation, slowing innovation and increasing error risk.

Security, Scalability, and Performance Issues

- Data Access Risks: Directly exposing databases to agents or teams can overload production systems or create dangerous security exposures.

- Latency and Orchestration Bottlenecks: Multi-hop agent/tool/data workflows add latency, while embedding updates and change management introduce operational friction.

Hidden and Rising Costs

- Token Usage and API Expenses: LLM token and data movement costs can spiral out of control—there’s little built-in visibility or optimization.

- Engineering and Maintenance Overhead: Each new integration or agent increases cloud, infrastructure, and human resource costs.

Challenges of Combining All These Technologies

- Operational Complexity: Running MCP, A2A, and RAG together is not a “platform”; it is a patchwork.

- Change Management Nightmares: Changes in any layer (schema, API, agent) can cascade and break workflows, requiring coordinated engineering releases.

- Lack of Pluggability: No easy way to “plug in” new capabilities, share resources, or instantly compose workflows.

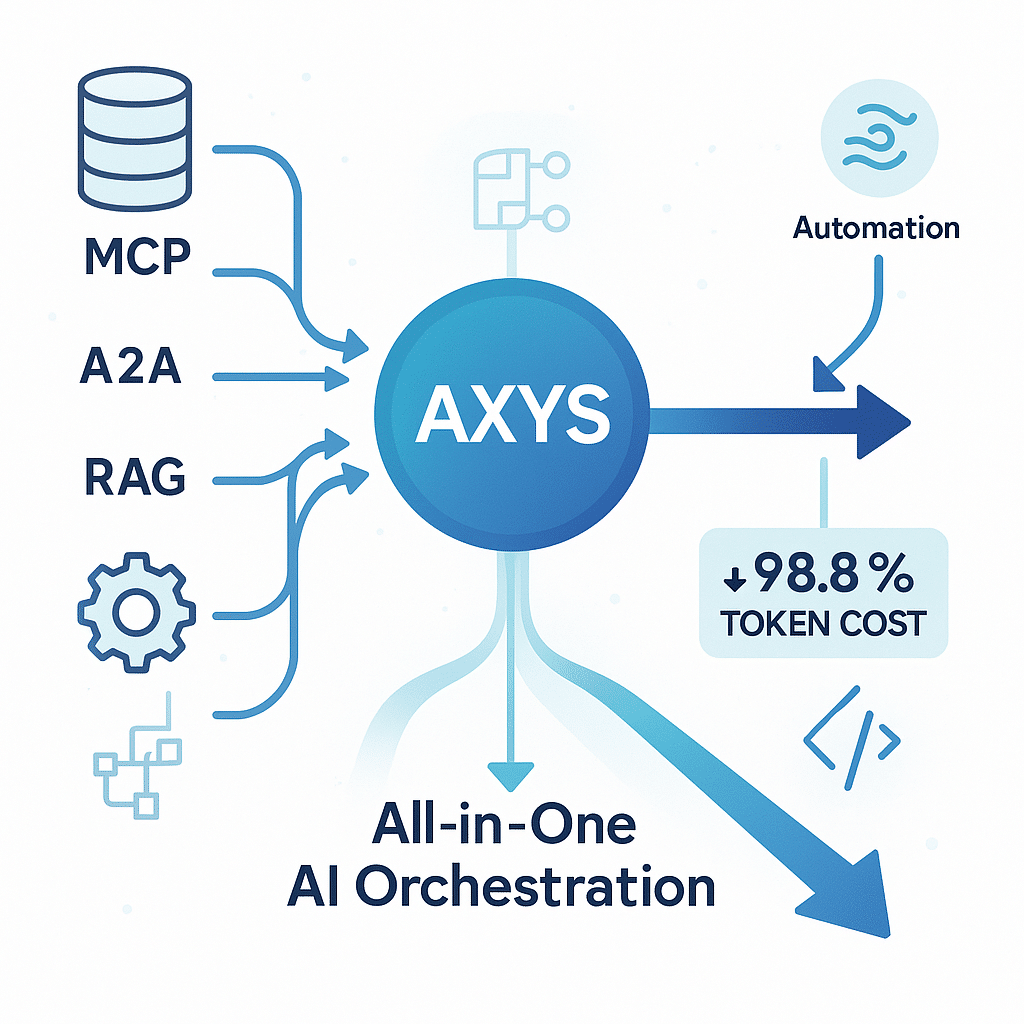

Plug in any data source, agent, or tool and orchestrate AI workflows instantly—with radical cost savings.

AXYS: The Only All-in-One Solution

AXYS is built to eliminate every one of these barriers:

- Seamless Integration: Instantly connects to any MCP server, external API, agent, or data source—legacy or modern—via unified, auto-generated connectors.

- No-Code/Low-Code Orchestration: Business users and technical teams can build, configure, and evolve workflows visually—no engineering bottlenecks.

- Plug-and-Play Extensibility: Any new tool, API, or agentic capability can be added, composed, and reused across solutions in minutes.

- Automated Data Harmonization: AXYS ensures that enterprise data is always fresh, harmonized, and securely available to LLMs—without manual embedding or risky direct DB access.

- Security and Compliance by Design: Centralized, auditable controls protect every workflow, data connection, and agent interaction.

- Radical Cost Reduction: Built-in token optimization and orchestration deliver up to a 98.8% reduction in LLM token costs, with near-zero integration overhead.

- Effortless Change Management: All connectors, schemas, and workflows are managed centrally—AXYS absorbs changes so your business doesn’t break.

- Production-Proven Scale: Already live in demanding environments, AXYS enables unlimited agentic AI without the headaches.

| Issue / Challenge | MCP | A2A | RAG | AXYS Solution (All-in-One) |
| --- | --- | --- | --- | --- |
| Not a Product (Just Protocols/Patterns) | Protocol only, not a turnkey solution | Protocol only, not a product | A method, not a full product | Complete no-code/low-code, out-of-the-box platform, production-ready |
| Requires Custom Integrations | Yes: each tool/source needs custom adapter | Yes: each agent needs custom API/workflow | Yes: embedding pipelines, search APIs, etc. | All integrations auto-generated, managed centrally; plug-and-play connectors |
| No Unified Management/Orchestration | No unified dashboard or orchestration | No unified management across agents | No central workflow management | Single dashboard to orchestrate, manage, and monitor everything |
| Manual Schema & Resource Discovery | Manual resource/schema management | Limited or no schema discovery | Manual embedding/schema mapping | Auto-discovers resources, schemas, agents; catalogs everything for instant use |
| Difficult to Scale/Extend | Scaling means more code | Adding agents increases complexity | Scaling data/embeddings is manual | Instantly scales: just add sources/agents; extensible by design |
| Security & Compliance | Security depends on each custom integration | Security protocols still evolving | Data exposure risks, little isolation | Enterprise-grade security, audit, access controls across all workflows |
| Performance & Latency | Dynamic orchestration can be slow | Multi-agent flows add latency | Embedding refresh adds operational lag | Optimized for speed with caching, batching, and smart orchestration |
| High Cost (Tokens/Engineering) | No built-in cost controls; engineering intensive | Higher ops cost as agents multiply | Embedding/query costs can balloon | Cuts token costs by up to 98.8%; minimizes engineering headcount/cost |
| Maintenance Overhead | Updating integrations = repeated work | Each agent/workflow must be updated separately | Embedding & pipeline maintenance is manual | All maintenance managed centrally; one update applies platform-wide |
| No Plug-and-Play Extensibility | New resources = new code | New agent = new integration | New data = new pipelines | Plug-and-play for any source, agent, or tool; instant reuse and composition |
| No End-to-End Workflow Automation | Stops at connection, not business outcome | Stops at agent communication | Stops at data retrieval | Full workflow from data connection to AI-powered business outcome, all under one roof |

Finally, a single platform that lets you build and scale enterprise AI without the complexity.

Summary

MCP, A2A, and RAG each bring unique strengths to AI-driven business automation, but combining them is a recipe for operational complexity, security risk, and escalating costs. There is currently no other platform that unifies these protocols—and all the “must-haves” of modern orchestration—under one roof, especially not with a no-code interface and near-instant extensibility.

AXYS is the answer: a truly production-ready, all-in-one AI orchestration platform that enables businesses to focus on their solutions, not the plumbing, for less than the price of a single engineer. With AXYS, your enterprise can unlock agentic AI at scale, fully integrated, radically simplified, and future-proof.

Frequently asked questions

Everything you need to know about AXYS Platform

AI, LLM, Prompting, and Search

Yes, AXYS is purpose-built to fully support Retrieval Augmented Generation (RAG) workflows for your AI agents. The platform provides pre-built, proprietary RAG pipelines that are ready to use out of the box, enabling your agents to instantly retrieve and leverage relevant data, structured or unstructured, for more accurate and contextual responses. With AXYS’s intuitive, no-code interface, you can easily connect, organize, and structure all your data sources, set up RAG pipelines without any custom development, and customize every aspect of the workflow. This includes selecting data sources, filtering data, designing prompts, and formatting outputs to fit your specific business needs. By optimizing each query, AXYS enhances the quality of AI-generated responses while also reducing token usage and operational costs for large language models.

AXYS is engineered to dramatically reduce the cost and complexity of AI and LLM usage in your organization. By leveraging advanced Retrieval-Augmented Generation (RAG) workflows and intelligent data orchestration, AXYS delivers only the most relevant and concise information to AI models. This targeted approach achieves up to a 98.8 percent reduction in token usage, significantly lowering operational costs for OpenAI and other LLM providers while improving performance. AXYS automates the process of preparing, filtering, and structuring your data before sending it to AI, ensuring efficient, cost-effective queries and faster results without sacrificing data accuracy or security.
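To put the percentage in concrete terms, the arithmetic can be sketched as below. The token counts and the per-token price are made-up inputs for illustration, not AXYS measurements or any provider's actual pricing.

```python
def token_reduction_pct(baseline_tokens: int, optimized_tokens: int) -> float:
    """Percentage of tokens saved relative to the baseline prompt size."""
    if baseline_tokens <= 0:
        raise ValueError("baseline_tokens must be positive")
    return 100.0 * (baseline_tokens - optimized_tokens) / baseline_tokens


def query_cost(tokens: int, usd_per_million_tokens: float) -> float:
    """Cost of one query's input tokens at a flat per-million-token rate."""
    return tokens / 1_000_000 * usd_per_million_tokens


# Hypothetical example: a 100,000-token raw export trimmed to 1,200 relevant tokens.
baseline, optimized = 100_000, 1_200
price = 5.0  # assumed USD per million input tokens

print(f"reduction: {token_reduction_pct(baseline, optimized):.1f}%")
print(f"cost per query: ${query_cost(baseline, price):.4f} -> ${query_cost(optimized, price):.4f}")
```

Under these assumed inputs the reduction works out to 98.8 percent. The actual saving for any workload depends on how much of the original prompt was irrelevant to the question being asked.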

Yes, AXYS is fully compatible with Model Context Protocol (MCP) and modern agentic AI frameworks. Every AXYS connector can be exposed as an MCP-compatible endpoint, making it easy to integrate AXYS-powered data orchestration with any MCP-compliant agent or AI system. This ensures seamless interoperability, faster AI solution development, and out-of-the-box support for the latest agentic AI architectures. Visit our product page about MCP and AI-Agents.

AXYS offers a no-code approach to AI prompting and model integration, making it simple for any user to connect data and interact with AI models. The platform intelligently analyzes your connected data schemas and suggests optimized prompts tailored to your unique data structure, helping you get more accurate and relevant results from AI models such as those from OpenAI. AXYS can even use its own built-in AI to refine or enhance your prompts, automatically generating the most effective questions and responses for your use case. This seamless process allows businesses to integrate powerful AI and LLM capabilities without manual coding, complex engineering, or guesswork, accelerating AI adoption and improving outcomes.

Integration and Connectivity

Adding or removing data connectors in AXYS is a simple, no-code process. You only need to provide your authentication credentials, and AXYS automatically connects to your data source, verifies the integration, and continuously monitors the connection for health and reliability. No engineering or manual setup is required, so you can easily manage all your connectors from a user-friendly interface. This makes it quick and effortless to update, expand, or streamline your data integrations as your business needs change.

AXYS comes ready to connect with a wide range of popular databases, file storage services, SaaS applications, and cloud platforms. Out of the box, AXYS supports connectors for Microsoft Azure, AWS RDS and S3, MySQL, Google Workspace, SharePoint, Salesforce, Jira, QuickBooks, HubSpot, BambooHR, Okta, FreshDesk, Google Analytics, Authorize.net, PayPal, Constant Contact, CSV and Excel files, and more. If you have a source not currently listed, AXYS can easily add new connectors based on customer needs or popular demand, ensuring compatibility with virtually any business system.

Pricing and Licensing

Yes, AXYS is purpose-built to minimize LLM and OpenAI costs for your organization. By leveraging proprietary Retrieval Augmented Generation (RAG) workflows and intelligent data filtering, AXYS reduces the amount of data sent to AI models—cutting token usage by up to 98.8 percent compared to industry averages. AXYS also provides built-in real-time tracking and monitoring tools so you can see exactly how many tokens are being used for each query. This transparency helps you optimize prompt design, monitor usage, and control costs efficiently as your AI adoption grows.

AXYS is built with its own proprietary Retrieval Augmented Generation (RAG) technology, designed from the ground up to dramatically reduce token usage and operational costs with OpenAI and other large language models (LLMs). By intelligently filtering and delivering only the most relevant, context-rich data to each AI query, AXYS minimizes token consumption—often resulting in up to 98.8 percent cost savings. The platform also offers real-time token usage tracking and optimization tools, giving you complete visibility and control as your AI adoption grows. This advanced approach makes AXYS one of the most efficient and cost-effective solutions for managing AI and LLM costs.